
Neural networks + symbolic reasoning + rules + constraints.

In industrial environments, black-box models are not enough. Systems must reason within physical and operational constraints.

Neuro-symbolic AI integrates two complementary layers:

- A neural layer that interprets ambiguous data and generates candidate actions

- A symbolic reasoning layer that enforces deterministic rules, constraints, and validation gates

The neural layer reads documents, extracts entities, summarizes state, and proposes actions.
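Here is a minimal Python sketch of that division of labor. The `Proposal` schema, the `neural_propose` stub (a stand-in for a real model), and the numeric limits in `RULES` are all hypothetical. Only the shape matters: the neural side proposes, and a deterministic rule set decides what is authorized.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional

@dataclass
class Proposal:
    """A candidate action emitted by the neural layer (hypothetical schema)."""
    action: str
    setpoint: float
    confidence: float

# A symbolic rule returns None when satisfied, or a human-readable reason when violated.
Rule = Callable[[Proposal], Optional[str]]

def neural_propose(document: str) -> List[Proposal]:
    """Hypothetical stand-in for the neural layer; a real system would call a trained model here."""
    if "overheating" in document.lower():
        return [
            Proposal("reduce_load", setpoint=70.0, confidence=0.82),
            Proposal("increase_load", setpoint=110.0, confidence=0.40),
        ]
    return []

def symbolic_validate(proposal: Proposal, rules: List[Rule]) -> List[str]:
    """Deterministically evaluate every rule and collect the violations."""
    results = [rule(proposal) for rule in rules]
    return [reason for reason in results if reason is not None]

# Illustrative rules with made-up limits.
RULES: List[Rule] = [
    lambda p: None if p.setpoint <= 100.0 else f"setpoint {p.setpoint} exceeds limit 100.0",
    lambda p: None if p.confidence >= 0.6 else f"confidence {p.confidence} below 0.6",
]

def decide(document: str) -> List[Proposal]:
    """The neural layer proposes; only proposals with no rule violations are authorized."""
    return [p for p in neural_propose(document) if not symbolic_validate(p, RULES)]
```

Calling `decide("Pump 3 overheating")` authorizes only `reduce_load`. `increase_load` is rejected by both rules, however plausible the model found it.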

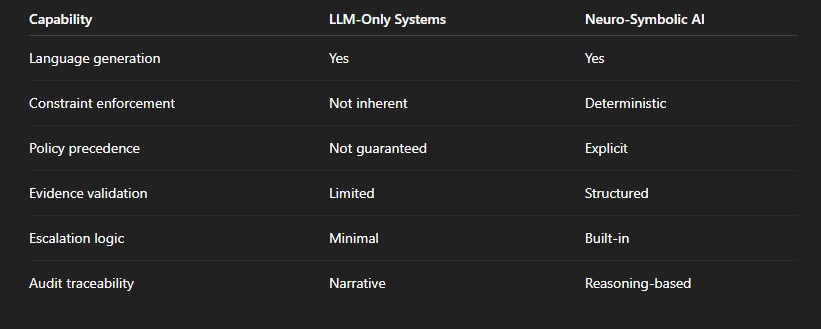

Large language models can generate coherent outputs. However, coherence does not guarantee:

- Constraint compliance

- Evidence validation

- Risk management

- Policy precedence

- Escalation under uncertainty

In high-stakes industrial systems, AI must reason under constraints. It must verify intermediate results and enforce operating boundaries before acting.

Neuro-symbolic AI operationalizes that discipline.


Neuro-symbolic systems insert validation checkpoints between steps in a workflow. Intermediate outputs must satisfy defined correctness conditions before execution continues.
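A validation checkpoint can be sketched as a check function that runs after each workflow step. If an intermediate output fails its correctness condition, execution halts. The stage names and the 0 to 150 range below are illustrative assumptions, not values from any real system.

```python
from typing import Any, Callable, List, Tuple

class CheckpointFailed(Exception):
    """Raised when an intermediate output does not satisfy its correctness condition."""

Step = Callable[[Any], Any]
Check = Callable[[Any], bool]

def run_with_checkpoints(data: Any, stages: List[Tuple[str, Step, Check]]) -> Any:
    """Run each step, then its check; later stages never see a rejected intermediate result."""
    for name, step, check in stages:
        data = step(data)
        if not check(data):
            raise CheckpointFailed(f"checkpoint after '{name}' rejected output: {data!r}")
    return data

# Hypothetical two-stage workflow: parse raw readings, then average them.
STAGES: List[Tuple[str, Step, Check]] = [
    ("parse", lambda raw: [float(x) for x in raw.split(",")], lambda xs: len(xs) > 0),
    ("mean", lambda xs: sum(xs) / len(xs), lambda m: 0.0 <= m <= 150.0),
]
```

`run_with_checkpoints("12.0,13.0,14.0", STAGES)` returns `13.0`. With `"200,210"` the mean falls outside the assumed range, so the workflow raises `CheckpointFailed` instead of passing the value on.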

Evidence is graded by provenance and confidence. When inputs are incomplete or contradictory, the system does not guess.

It either adjusts strategy under constraints or escalates. This is essential in environments where data quality varies and failure carries cost.
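Here is one way to grade evidence, assuming each item carries a source type and a confidence score. Confidence is weighted by a per-source trust factor. The system declines to pick a claim when the best-supported claim is weak, or when it leads a contradicting claim by only a small margin. The source categories, weights, and thresholds are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List

# Hypothetical trust weights per source type; real values would come from site policy.
PROVENANCE_WEIGHT: Dict[str, float] = {
    "calibrated_sensor": 1.0,
    "operator_log": 0.7,
    "model_inference": 0.5,
}

@dataclass
class Evidence:
    claim: str         # e.g. "valve_open" or "valve_closed"
    source: str        # key into PROVENANCE_WEIGHT; unknown sources get weight 0
    confidence: float  # 0.0 to 1.0

def grade(e: Evidence) -> float:
    """Graded score = provenance weight x reported confidence."""
    return PROVENANCE_WEIGHT.get(e.source, 0.0) * e.confidence

def assess(evidence: List[Evidence], min_score: float = 0.6, margin: float = 0.2) -> str:
    """Return the supported claim, or 'ESCALATE' when evidence is weak or contradictory."""
    best_by_claim: Dict[str, float] = {}
    for e in evidence:
        best_by_claim[e.claim] = max(best_by_claim.get(e.claim, 0.0), grade(e))
    if not best_by_claim:
        return "ESCALATE"  # no evidence at all
    ranked = sorted(best_by_claim.items(), key=lambda kv: kv[1], reverse=True)
    best_claim, best = ranked[0]
    runner_up = ranked[1][1] if len(ranked) > 1 else 0.0
    if best < min_score or best - runner_up < margin:
        return "ESCALATE"  # too weak, or too close to a contradicting claim: do not guess
    return best_claim
```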

Explainability in neuro-symbolic AI is not a post-hoc narrative.

Decisions are accompanied by structured reasoning traces that record:

- Which constraints were active

- Which rules applied

- Which alternatives were rejected

- Where uncertainty influenced the decision

This produces audit-ready traceability rather than generated explanation text. Logging shows what happened. Audit traces show why.
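A reasoning trace can be as simple as a structured record filled in while the decision is being evaluated, then serialized for audit. The field names mirror the list above. The schema itself, and the example constraint and rule IDs, are assumptions for illustration.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import Dict, List

@dataclass
class ReasoningTrace:
    """Hypothetical audit record built alongside a decision, not generated afterwards."""
    decision: str
    active_constraints: List[str] = field(default_factory=list)
    rules_applied: List[str] = field(default_factory=list)
    rejected_alternatives: List[Dict[str, str]] = field(default_factory=list)
    uncertainty_notes: List[str] = field(default_factory=list)

    def reject(self, alternative: str, reason: str) -> None:
        """Record an alternative that was considered and the reason it lost."""
        self.rejected_alternatives.append({"alternative": alternative, "reason": reason})

    def to_json(self) -> str:
        """Serialize with sorted keys so traces are stable to diff and archive."""
        return json.dumps(asdict(self), sort_keys=True)

# Example: the trace records why reduce_load was chosen, not just that it was.
trace = ReasoningTrace(decision="reduce_load")
trace.active_constraints.append("bearing_temp_c <= 95")
trace.rules_applied.append("R12: prefer load reduction over shutdown when temp is rising")
trace.reject("increase_load", "violates bearing_temp_c <= 95")
trace.uncertainty_notes.append("vibration sensor B offline; relied on sensor A only")
```

A plain log line would carry only `decision`. The other fields hold the "why" that an auditor needs.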

A defining feature of neuro-symbolic AI is constraint-first gating.

Before any action is executed, the system checks:

- Safety limits

- Regulatory requirements

- Operational envelopes

- Equipment boundaries

- Policy thresholds

If a proposed action violates constraints or lacks sufficient evidence, it is blocked, modified to a safer alternative, or escalated.
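The block, modify, or escalate behavior might be sketched as a gate function that runs before execution and returns a verdict. The speed envelope, hard trip limit, and evidence threshold below are hypothetical. In this sketch the hard-limit check runs first, so such an action is blocked no matter how strong its evidence is.

```python
from dataclasses import dataclass, replace
from enum import Enum
from typing import Tuple

class Verdict(Enum):
    EXECUTE = "execute"
    MODIFY = "modify"
    BLOCK = "block"
    ESCALATE = "escalate"

@dataclass(frozen=True)
class Action:
    name: str
    target_rpm: float
    evidence_score: float

# Hypothetical limits; in practice these would come from safety cases and equipment datasheets.
RPM_ENVELOPE = (500.0, 3000.0)  # normal operating envelope
HARD_TRIP_RPM = 3600.0          # never command at or above this
MIN_EVIDENCE = 0.7

def gate(action: Action) -> Tuple[Verdict, Action]:
    """Check constraints before execution; return the verdict and the action to run (if any)."""
    lo, hi = RPM_ENVELOPE
    if action.target_rpm < 0 or action.target_rpm >= HARD_TRIP_RPM:
        return Verdict.BLOCK, action  # hard safety limit: no safe modification offered
    if action.evidence_score < MIN_EVIDENCE:
        return Verdict.ESCALATE, action  # insufficient evidence: hand to a human
    if not lo <= action.target_rpm <= hi:
        safer = replace(action, target_rpm=min(max(action.target_rpm, lo), hi))
        return Verdict.MODIFY, safer  # clamp into the envelope as the safer alternative
    return Verdict.EXECUTE, action
```

Clamping is only one possible "safer alternative." Another design could step down gradually or pick a predefined safe state instead.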

Neuro-symbolic AI preserves neural flexibility while enforcing structured governance.

This architecture is most relevant in environments where:

- Downtime is expensive

- Safety exposure is significant

- Compliance matters

- Edge cases are common

- Data may be incomplete

Energy operations, manufacturing systems, logistics networks, and other industrial environments require AI that is constraint-aware and auditable.

Neuro-symbolic AI combines neural interpretation with deterministic reasoning to enable bounded autonomy in complex systems.

It is not designed to produce persuasive answers.

It is designed to enforce constraints, validate decisions, and operate within defined policy boundaries. In industrial and energy environments, that distinction matters.

Neuro-symbolic AI is an approach that combines neural models for interpreting unstructured or ambiguous information with symbolic reasoning for enforcing explicit rules, constraints, and policies. Neural components generate candidate actions or extract meaning from data, while the symbolic layer evaluates those proposals against deterministic logic before authorizing execution. This separation supports governed, constraint-aware decision-making rather than unconstrained automation.

Large language models generate fluent text and plausible recommendations, but they are not inherently compelled to follow enforceable policies, safety limits, or validation procedures. Neuro-symbolic AI adds a reasoning layer that enforces constraints, applies policy precedence, validates intermediate results, and blocks or escalates actions when requirements are not satisfied. The result is bounded autonomy rather than narrative-driven output.
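Policy precedence can be modeled as an ordering over rule categories. When two policies disagree, the more authoritative one wins. The category names and their order are an assumed example (safety over regulatory over operational over efficiency). A real deployment would take them from its own governance framework.

```python
from dataclasses import dataclass
from typing import List, Optional

# Hypothetical precedence order: lower number = more authoritative.
PRECEDENCE = {"safety": 0, "regulatory": 1, "operational": 2, "efficiency": 3}

@dataclass
class PolicyRuling:
    policy: str    # identifier of the policy that produced this ruling
    category: str  # key into PRECEDENCE
    verdict: str   # "allow" or "deny"

def resolve(rulings: List[PolicyRuling]) -> Optional[PolicyRuling]:
    """Return the ruling that governs; within the same category, a deny outranks an allow."""
    if not rulings:
        return None
    # Sort key: category rank first, then False (deny) before True (allow).
    return min(rulings, key=lambda r: (PRECEDENCE[r.category], r.verdict != "deny"))
```

Returning the winning ruling, rather than a bare allow/deny, lets the reasoning trace cite which policy decided the outcome.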

Decisions in a neuro-symbolic system are explainable, because explainability is derived from structured reasoning traces rather than post-hoc summaries. The system records which constraints were active, which rules were applied, what evidence was evaluated, and which alternatives were rejected. This produces an audit-ready trail that supports inspection, governance, and operational review.

Neuro-symbolic systems treat uncertainty as an explicit condition. When inputs are incomplete, contradictory, or low confidence, the reasoning layer can require additional validation, switch to alternate data sources, adjust strategy within constraints, or escalate to human oversight. The system does not proceed based solely on plausibility when constraint or evidence thresholds are not met.
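The switch-sources-then-escalate path described above can be sketched as trying data sources in priority order and escalating when none meets a confidence threshold. The source names, the `(value, confidence)` return convention, and the 0.8 threshold are assumptions for illustration.

```python
from typing import Callable, List, Optional, Tuple

# A source returns (value, confidence), or None when its data is unavailable.
Source = Callable[[], Optional[Tuple[float, float]]]

def read_with_fallback(
    sources: List[Tuple[str, Source]], min_conf: float = 0.8
) -> Tuple[str, Optional[float], str]:
    """Try sources in priority order; escalate instead of guessing if none is trustworthy."""
    for name, fetch in sources:
        result = fetch()
        if result is None:
            continue  # missing input: switch to the next source
        value, conf = result
        if conf >= min_conf:
            return "PROCEED", value, name
        # Low-confidence reading: do not use it; fall through to the next source.
    return "ESCALATE", None, "human_review"
```

Under this sketch, a missing primary reading with a confident secondary one yields `("PROCEED", value, "secondary")`. If every source is missing or below threshold, the result is `("ESCALATE", None, "human_review")`.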

Neuro-symbolic AI is particularly suited to environments where decisions must be correct, governed, and defensible. This includes energy operations, industrial manufacturing, logistics networks, and other high-stakes systems where safety, compliance, and operational continuity are critical. In these settings, constraint enforcement and audit traceability are essential.